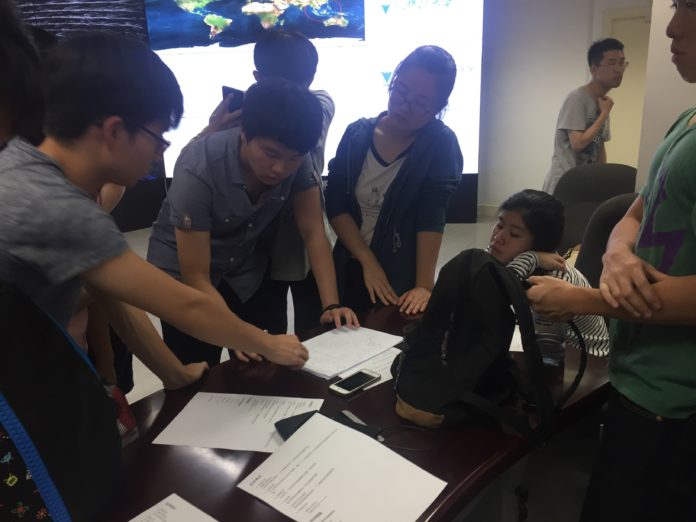

After a few weeks of learning the basics of the new programming languages mentioned previously, it’s finally time to put what we have learnt to use!

Brief overview of what our project entails:

We are creating a webpage/app that accesses the phone’s camera and displays the camera’s view in real time.

On the webpage, an AR visualization of the person’s social relationships (friends, etc.) and a VR visualization of the person’s information (height, weight, etc.) will be overlaid on the streamed camera view.

Essentially, we are creating a novel and interesting way to display information that appeals to users!
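For the camera access described above, here is a minimal sketch of how a page could request the phone’s camera and show the live view. It assumes the browser’s standard `getUserMedia` API; the names `buildCameraConstraints`, `startCamera`, and the small `...Like` interfaces are hypothetical and only exist to keep the example self-contained. This is one possible approach, not necessarily what our final page will look like.

```typescript
// Which camera to prefer: "user" is the front camera, "environment" the rear one.
type CameraFacing = "user" | "environment";

// Shape of the constraints object we hand to getUserMedia (hypothetical name).
interface CameraConstraints {
  audio: boolean;
  video: { facingMode: CameraFacing };
}

// Minimal shapes of the browser objects we rely on, declared locally so the
// sketch stands alone. In a browser, pass navigator.mediaDevices and a
// <video> element.
interface MediaDevicesLike {
  getUserMedia(constraints: CameraConstraints): Promise<unknown>;
}

interface VideoLike {
  srcObject: unknown;
  play(): Promise<void>;
}

// Build the constraints: video only, rear camera preferred by default.
function buildCameraConstraints(facing: CameraFacing = "environment"): CameraConstraints {
  return { audio: false, video: { facingMode: facing } };
}

// Ask for camera permission, then show the live stream in the video element.
async function startCamera(
  devices: MediaDevicesLike,
  video: VideoLike,
  facing: CameraFacing = "environment",
): Promise<void> {
  const stream = await devices.getUserMedia(buildCameraConstraints(facing));
  video.srcObject = stream;
  await video.play();
}
```

In a real page this would be called as something like `startCamera(navigator.mediaDevices, document.querySelector("video")!)`. Note that browsers generally only allow camera access on pages served over HTTPS (or from localhost).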

Key components of our project:

Image segmentation – Being able to recognize humans and objects

Image recognition – Being able to identify a person from an existing database

Image registration – Being able to register an image (align it with a reference image)

AR implementation – Display the person’s relationships via AR visualization

Information visualization – Display the person’s information via VR visualization

Visual interaction – Visualize the relationships of nearby people/objects to the identified person

For this week, our key goal is to use tracking.js (an open-source JavaScript library that supports face tracking) to detect human faces. Some of us managed to go a step further and used it to apply a filter over the detected person’s face!
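To give an idea of what the face detection step looks like, here is a rough sketch. It assumes tracking.js and its face classifier data are loaded on the page via `<script>` tags, and it uses the calls from the library’s face-detection example (`ObjectTracker('face')`, `setInitialScale`, `setStepSize`, `setEdgesDensity`, `on('track', ...)`, and `tracking.track`). The interfaces, the function names `drawFaceBoxes` and `startFaceTracking`, and the tuning values are illustrative assumptions, not our exact code.

```typescript
// One detected face, as reported in the "track" event's data array.
interface FaceRect {
  x: number;
  y: number;
  width: number;
  height: number;
}

interface FaceTrackEvent {
  data: FaceRect[];
}

// Subset of the canvas 2D context used here; a real
// CanvasRenderingContext2D fits this shape.
interface Context2DLike {
  clearRect(x: number, y: number, w: number, h: number): void;
  strokeRect(x: number, y: number, w: number, h: number): void;
}

// Subset of the tracking.js API this sketch assumes.
interface ObjectTrackerLike {
  setInitialScale(scale: number): void;
  setStepSize(size: number): void;
  setEdgesDensity(density: number): void;
  on(eventName: string, handler: (event: FaceTrackEvent) => void): void;
}

interface TrackingLike {
  ObjectTracker: new (kind: string) => ObjectTrackerLike;
  track(selector: string, tracker: ObjectTrackerLike, options: { camera: boolean }): unknown;
}

// Wipe the overlay canvas and outline every face found in this frame.
function drawFaceBoxes(ctx: Context2DLike, faces: FaceRect[], width: number, height: number): void {
  ctx.clearRect(0, 0, width, height);
  for (const face of faces) {
    ctx.strokeRect(face.x, face.y, face.width, face.height);
  }
}

// Create a face tracker, redraw the boxes on every "track" event,
// and start tracking the given <video> element using the webcam.
function startFaceTracking(
  lib: TrackingLike,
  videoSelector: string,
  ctx: Context2DLike,
  width: number,
  height: number,
): void {
  const tracker = new lib.ObjectTracker("face");
  tracker.setInitialScale(4); // example tuning values, adjust as needed
  tracker.setStepSize(2);
  tracker.setEdgesDensity(0.1);
  tracker.on("track", (event) => drawFaceBoxes(ctx, event.data, width, height));
  lib.track(videoSelector, tracker, { camera: true });
}
```

On the page, `lib` would be the global `tracking` object and `ctx` the 2D context of a canvas layered over the video. A face filter could follow the same pattern by drawing an image at each rectangle instead of an outline.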

Once this is done, the next step will be to bring our project over to the mobile platform. Our HTML page will access the user’s phone camera, and the feed will be displayed on a PC, along with the information mentioned above. That’s all for this week!