Another week has passed, and it is now week 11. Time sure flies when you are struggling. The more I understand about the theme project, the more difficult it seems to be. Unforeseen issues keep popping up out of nowhere, and until they are resolved, it is hard to make progress on the project.

The first problem was the dataset size: the files for all the datasets add up to around 2.5 TB of storage, and we had no way of using them without processing them first. Furthermore, since we had to run the neural network model online, the maximum dataset size allowed was only 10 GB. We had to find ways to shrink and process the data so that it could be uploaded while retaining its crucial features.
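To put the numbers in perspective, here is a minimal sketch of the arithmetic and of one possible way to shrink data. It assumes decimal units (1 TB = 10^12 bytes) and, purely for illustration, that each file holds a 2D grid of values that can be averaged in blocks. The function names `required_reduction` and `block_average` are made up for this example and are not the processing we actually used.

```python
import math


def required_reduction(total_bytes, limit_bytes):
    """Return how many times smaller the data must become to fit the limit."""
    return total_bytes / limit_bytes


def block_average(grid, factor):
    """Downsample a 2D list by averaging non-overlapping factor x factor blocks.

    Rows and columns that do not fill a whole block are dropped.
    """
    rows, cols = len(grid), len(grid[0])
    out = []
    for r in range(0, rows - rows % factor, factor):
        out_row = []
        for c in range(0, cols - cols % factor, factor):
            block = [grid[r + i][c + j]
                     for i in range(factor) for j in range(factor)]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


TB = 10**12
GB = 10**9

# 2.5 TB must fit into 10 GB, so the data has to shrink 250-fold.
ratio = required_reduction(2.5 * TB, 10 * GB)

# Assumption: if the data were 2D grids, this is the per-axis factor needed.
per_axis = math.ceil(math.sqrt(ratio))

small = block_average([[1, 2, 3, 4], [5, 6, 7, 8]], 2)
print(ratio, per_axis, small)
```

A 250-fold reduction is large, which is why simply compressing the files is unlikely to be enough and some features inevitably have to be summarised or discarded.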

The second problem was that there were only 20 samples of data, meaning that we had to train a model using fewer than 20 samples. As the model inputs have high dimensionality, it is hard to train a model from scratch with such a small sample size. Data augmentation is therefore required: we have to find ways to create additional training samples from the ones we already have.
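As a rough illustration of what augmentation can look like, the sketch below expands a handful of samples using flips and small random noise. It assumes each sample is a 2D grid of numbers and that flipping and slight noise do not change what the sample represents, which may not hold for every dataset. Labels are left out for brevity, and the helper names and default values (`noisy_copies`, `sigma`) are placeholders rather than the settings we used.

```python
import random


def flip_horizontal(sample):
    """Mirror each row left to right."""
    return [row[::-1] for row in sample]


def flip_vertical(sample):
    """Reverse the order of the rows (copies each row)."""
    return [row[:] for row in sample[::-1]]


def add_noise(sample, sigma, rng):
    """Add independent Gaussian noise with standard deviation sigma."""
    return [[v + rng.gauss(0.0, sigma) for v in row] for row in sample]


def augment(samples, noisy_copies=2, sigma=0.01, seed=0):
    """Return the originals plus flipped and noisy variants of each sample."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    out = []
    for s in samples:
        variants = [
            s,
            flip_horizontal(s),
            flip_vertical(s),
            flip_vertical(flip_horizontal(s)),
        ]
        for v in variants:
            out.append(v)
            for _ in range(noisy_copies):
                out.append(add_noise(v, sigma, rng))
    return out


# 20 toy 2x2 samples standing in for the real data.
samples = [[[float(i), float(i + 1)], [float(i + 2), float(i + 3)]]
           for i in range(20)]
augmented = augment(samples)
print(len(samples), "->", len(augmented))
```

With four orientations and two noisy copies of each, every original sample yields 12 training samples. The augmented samples are still derived from the same 20 originals, so they help against overfitting but do not replace genuinely new data.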

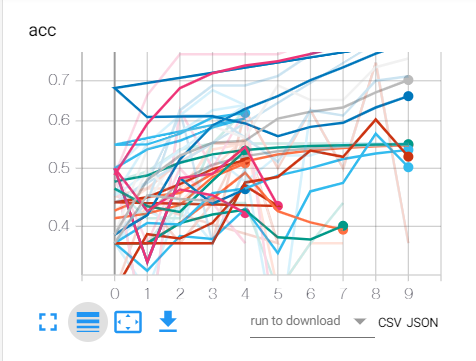

Last but not least: model architecture. Since we had no idea which type of architecture would give the best result, we had to try a variety of structures and layers to see which one suits the data best. With every run, we had to tweak the number of layers, the number of nodes, the number of epochs, the batch size… There were so many variables to change, and we could only change one at a time.
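The one-variable-at-a-time tuning described above can be sketched as a simple loop. This is only an outline under stated assumptions: `toy_score` is a made-up stand-in for "train the model and return a validation score", and the baseline and candidate values are invented for the example, not the ones from our runs.

```python
def one_at_a_time_search(score_fn, baseline, candidates):
    """Tune one hyperparameter at a time, keeping the best value found so far.

    score_fn takes a config dict and returns a score (higher is better).
    """
    best = dict(baseline)
    best_score = score_fn(best)
    for name, values in candidates.items():
        for value in values:
            trial = dict(best)
            trial[name] = value  # change exactly one variable
            score = score_fn(trial)
            if score > best_score:
                best, best_score = trial, score
    return best, best_score


def toy_score(cfg):
    """Hypothetical placeholder for a real training run.

    It peaks at layers=3, nodes=64, epochs=50, batch_size=16.
    """
    return -(abs(cfg["layers"] - 3)
             + abs(cfg["nodes"] - 64) / 32
             + abs(cfg["epochs"] - 50) / 25
             + abs(cfg["batch_size"] - 16) / 8)


baseline = {"layers": 1, "nodes": 32, "epochs": 25, "batch_size": 8}
candidates = {
    "layers": [1, 2, 3, 4],
    "nodes": [32, 64, 128],
    "epochs": [25, 50, 100],
    "batch_size": [8, 16, 32],
}

best, best_score = one_at_a_time_search(toy_score, baseline, candidates)
print(best, best_score)
```

This approach needs 13 training runs plus the baseline for the grid above, compared with 108 for trying every combination. The trade-off is that it can miss settings that only work well together, for example a deeper network that needs more epochs.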

The whole process took quite some time, as we had to wait for the model to finish training before we could see the results. Hopefully, we will find a suitable model soon.